Image and Real-time Video Style Transfer

Details

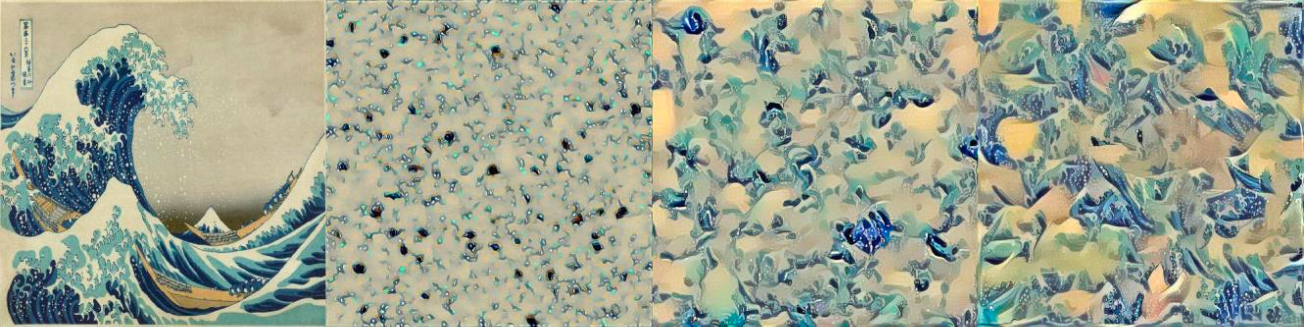

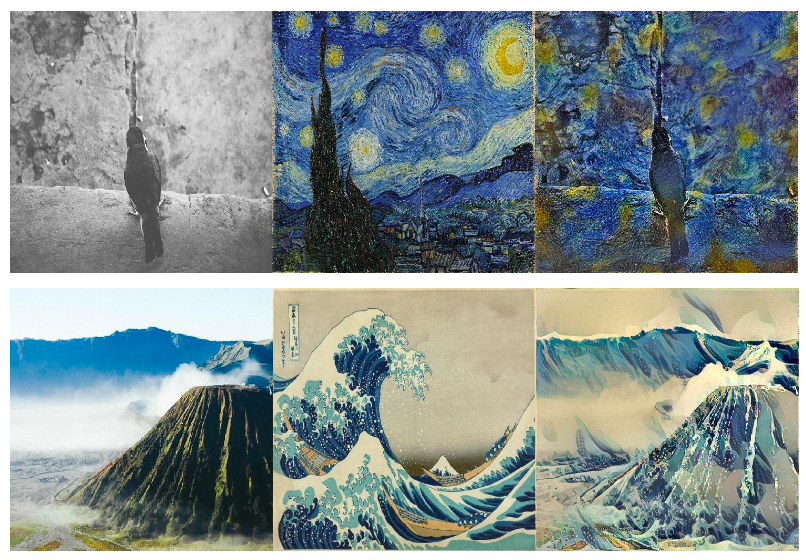

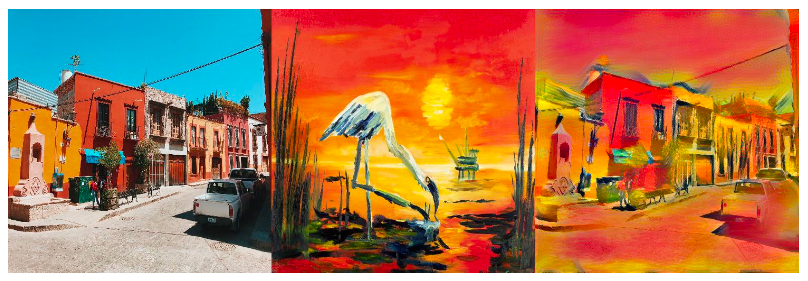

The term “style transfer” describes the problem of training machines to roughly mimic the human ability to combine different image styles into unique and complex visual experiences. Put simply, style transfer is the problem of recomposing images in the style of other images: given an original (content) image and a second image with a specific style, we want to create a new image that applies the style of the second image to the content of the first. Although it does not fully explain how humans create unique art pieces, style transfer is a logical step towards the more complex problem of understanding how humans create and perceive art.

We used the algorithm introduced by Gatys et al. as the basis of this project. With a pre-trained VGG model and a clever loss setup, the algorithm achieves high-quality results, provides insight into the image representations learned by Convolutional Neural Networks (CNNs), and empirically demonstrates their potential for high-level image synthesis and manipulation [1]. We also implemented an extension of this method, proposed by Johnson et al., to speed up the styling process. This extension allows for fast, real-time style transfer.

To obtain a style representation from the style input image, we constructed a Gram matrix, which can be built on top of the feature maps of any layer of VGG16. The Gram matrix consists of the correlations between different filter responses, and thus captures the texture information of an image.
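As a sketch of the standard definition, in the notation of Gatys et al.: let $F^l$ be the matrix whose row $i$ is the $i$-th feature map of layer $l$, flattened to $M_l$ spatial positions. The Gram matrix of that layer can then be written as follows. The normalization constant is omitted here because it varies between implementations.

$$G^l_{ij} = \sum_{k=1}^{M_l} F^l_{ik} \, F^l_{jk}$$

Entry $G^l_{ij}$ is the inner product of filter responses $i$ and $j$, summed over every spatial position $k$.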

The basic intuition behind the Gram matrix is that by multiplying the elements of each pair of feature maps together, we are essentially checking whether their activations “overlap” at the same location in the image. Summing over all locations then discards the spatial information, leaving only a record of which features tend to occur together.
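The intuition above can be illustrated with a minimal NumPy sketch. It is not the project's actual implementation: the function name `gram_matrix`, the `(channels, height, width)` layout, and the random stand-in feature maps are assumptions made for illustration, and no normalization is applied.

```python
import numpy as np


def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Return the (C, C) Gram matrix of a (C, H, W) stack of feature maps.

    Hypothetical helper for illustration; unnormalized, and the
    (channels, height, width) layout is an assumption.
    """
    c, h, w = features.shape
    # Flatten each feature map into a row vector of H*W activations.
    flat = features.reshape(c, h * w)
    # Entry (i, j) sums the elementwise product of maps i and j
    # over all spatial positions.
    return flat @ flat.T


# Random stand-in for the feature maps of one VGG16 layer (assumption).
rng = np.random.default_rng(0)
fmap = rng.standard_normal((4, 8, 8))

# Shuffle the spatial positions identically across all channels.
perm = rng.permutation(8 * 8)
shuffled = fmap.reshape(4, -1)[:, perm].reshape(4, 8, 8)

# The Gram matrix is unchanged: the summation discards spatial layout.
assert np.allclose(gram_matrix(fmap), gram_matrix(shuffled))
```

The final assertion checks the point made in the text: rearranging where activations occur, in the same way for every channel, leaves the Gram matrix unchanged, so it encodes texture rather than layout.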